AMD OpenAI Deal: Reshaping AI Hardware & Nvidia’s Dominance

The artificial intelligence landscape has just undergone a seismic shift. In a landmark move announced in October 2025, OpenAI and AMD unveiled a multi-year, $100 billion strategic partnership. The deal is about more than buying chips; it is a declaration of independence and a direct challenge to the status quo. OpenAI has committed to purchasing AMD’s next-generation Instinct GPUs, signaling a major diversification away from its long-time primary supplier, Nvidia.

For investors, technologists, and market analysts, this agreement is one of the most significant developments in the AI infrastructure race to date. It validates AMD as a formidable competitor in the high-stakes AI accelerator market and gives OpenAI a critical second source of the compute power that fuels its models. Understanding the deal’s financial and competitive implications is therefore essential for anyone looking to invest in AI.

The Context: Nvidia’s Unchallenged Reign and the Imperative to Diversify

To grasp the magnitude of this deal, one must first understand the market it disrupts. For years, Nvidia has held a near-monopoly on the GPUs used for training and running large-scale AI models. This dominance, as detailed by the Computer History Museum’s overview of the GPU, gave it immense pricing power and control over the supply chain. Companies like OpenAI were almost entirely dependent on Nvidia’s hardware, creating significant business risks.

Diversification therefore became a strategic imperative. A single-supplier model cannot sustain the build-out of the massive “AI factories” of the future, and, as Reuters has reported in its coverage of supply chain complexities, geopolitical and logistical risks are ever-present. OpenAI’s move is a calculated strategy to secure supply, drive down costs, and foster a more competitive hardware ecosystem. The shift bears directly on the outlook for Nvidia stock and for the broader semiconductor sector.

The $100 Billion Gambit: Deconstructing the AMD-OpenAI Partnership

This partnership is far more than a simple purchase order; it is a deeply integrated alliance with several key components. First, the $100 billion multi-year financial commitment gives AMD a stable, predictable revenue stream that supports long-term R&D investment, a major positive for its stock outlook. Second, the deal gives OpenAI the option to acquire up to a 10% equity stake in AMD through warrants tied to purchase milestones, aligning the two companies’ long-term interests.

The agreement centers on deploying up to 6 gigawatts (GW) of AMD compute capacity, an almost unfathomable scale that highlights the immense energy and infrastructure needs of future AI. At its core is AMD’s upcoming Instinct MI450 series, slated for late 2026. The deal gives OpenAI a clear infrastructure roadmap and solidifies AMD’s position among the AI stocks most worth watching.
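To make the 6 GW figure more concrete, here is a minimal back-of-envelope sketch in Python that converts the headline power capacity into a rough accelerator count and a full-year energy total. The per-accelerator wattages, the helper names, and the assumption of continuous operation are hypothetical illustrations, not figures from AMD, OpenAI, or the deal terms.

```python
# Back-of-envelope scale check for a 6 GW deployment.
# The per-accelerator wattages are HYPOTHETICAL all-in figures, not AMD specs.

HOURS_PER_YEAR = 8_760
DEPLOYMENT_WATTS = 6e9  # 6 gigawatts, the headline figure from the deal


def accelerators_supported(total_watts: float, watts_per_accelerator: float) -> int:
    """Whole accelerators a power budget can feed at an assumed all-in draw per unit.

    "All-in" (an assumption) means the chip plus its share of host CPUs,
    networking, and cooling, so it is higher than any single chip's rating.
    """
    if watts_per_accelerator <= 0:
        raise ValueError("watts_per_accelerator must be positive")
    return int(total_watts // watts_per_accelerator)


def annual_energy_twh(total_watts: float, utilization: float = 1.0) -> float:
    """Energy in terawatt-hours if the capacity runs for a full year at `utilization`."""
    watt_hours = total_watts * utilization * HOURS_PER_YEAR
    return watt_hours / 1e12  # 1 TWh = 1e12 Wh


if __name__ == "__main__":
    for assumed_watts in (1_500, 2_000, 3_000):  # hypothetical all-in draws
        count = accelerators_supported(DEPLOYMENT_WATTS, assumed_watts)
        print(f"{assumed_watts:,} W each -> about {count:,} accelerators")
    print(f"Full load for a year: about {annual_energy_twh(DEPLOYMENT_WATTS):.1f} TWh")
```

With 2,000 W assumed per unit, for example, 6 GW works out to about three million accelerators. The energy total depends only on the 6 GW figure and the assumed utilization, and real facilities rarely run at full load all year, so treat it as an upper bound.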

Technical Deep Dive: Why AMD’s MI450 and ROCm Matter

The choice of AMD hardware is not arbitrary. The Instinct MI450 series builds on the chiplet architecture AMD has championed, an approach that offers greater scalability and cost-efficiency than traditional monolithic designs. Furthermore, as CNBC has noted in its analysis of AMD’s AI chips, the company is focusing on massive memory capacity, which is critical for serving ever-larger models such as OpenAI’s GPT series.

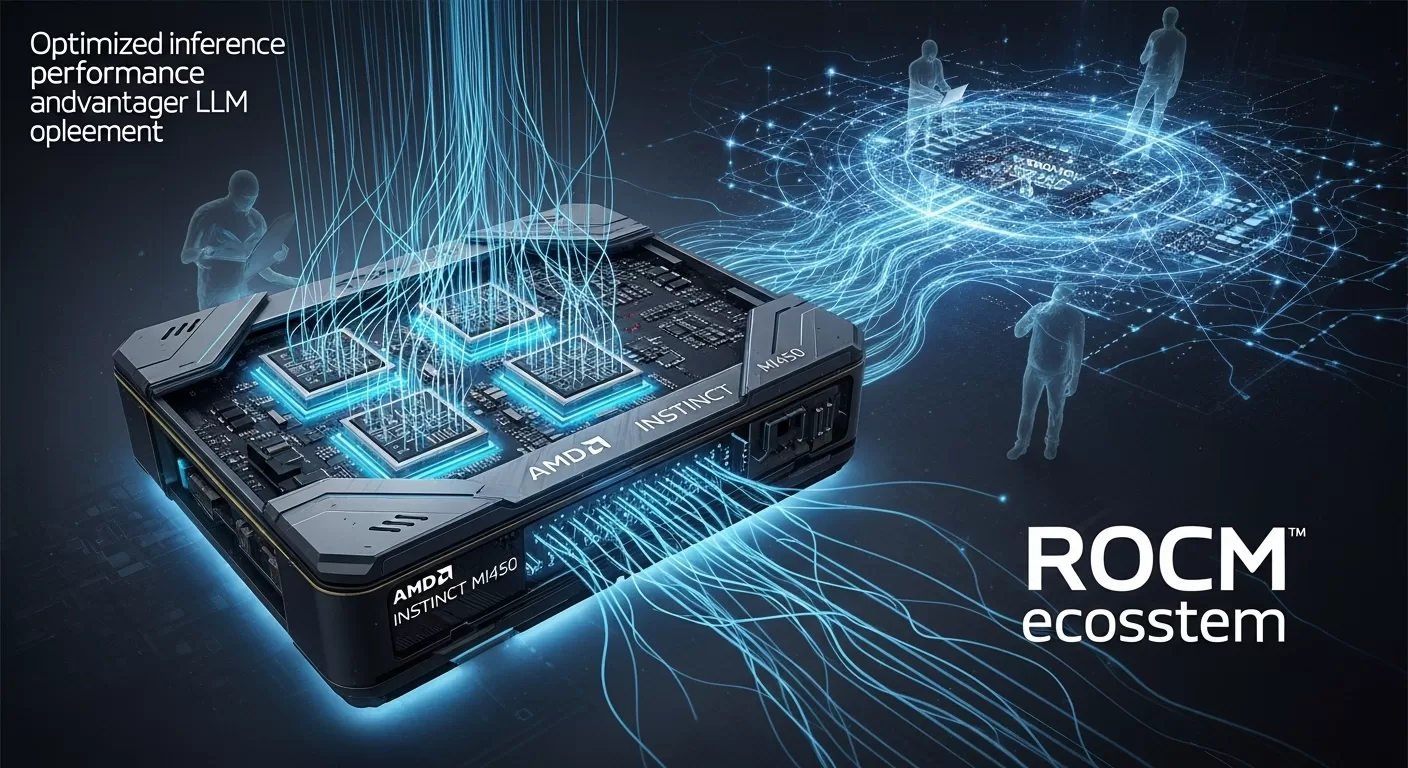

Perhaps most importantly, the deal is a strong vote of confidence in AMD’s ROCm software ecosystem. For years, Nvidia’s proprietary CUDA platform has been the industry standard, creating a powerful moat. By committing to AMD hardware and, with it, ROCm, OpenAI is helping to build a viable open-source alternative that could encourage wider adoption and broaden the GPU’s role in modern computing beyond a single software paradigm. The table below compares the two companies’ strategic approaches.

| Feature | AMD Instinct MI450 Series (Projected) | Nvidia Blackwell/Hopper Series |
|---|---|---|
| Architecture | Chiplet-based design for scalability and cost-efficiency | Monolithic die (Hopper) or two-die package (Blackwell), optimized for raw performance |
| Performance Focus | Optimized for large-model inference and memory capacity | Dominant in training performance, strong in inference |
| Software Ecosystem | ROCm (open source) | CUDA (proprietary, extensive library support) |
| Strategic Advantage | Supply chain diversification, cost-effectiveness, open ecosystem | Incumbent market leader, deep software moat, broad adoption |

Market Shockwaves: Shifting Dynamics and Future Implications

The announcement sent immediate shockwaves through financial markets. The deal validates AMD’s long-term strategy and introduces real competition into the AI hardware space. This is not just about OpenAI’s private valuation; it is about the entire technology food chain. Other major AI labs and cloud providers are likely to look more seriously at AMD as a viable alternative, potentially eroding Nvidia’s market share over time. And because The Wall Street Journal has highlighted the staggering energy costs of AI, any efficiency gains AMD can deliver become a key selling point.

The deal also affects cloud computing stocks, as major providers such as Microsoft, Amazon, and Google may need to adapt their own hardware strategies, whether by accelerating development of their in-house chips or by forging similar large-scale partnerships.

Expert Verdict: Navigating the New AI Hardware Frontier

The $100 billion AMD-OpenAI deal is a watershed moment. It marks the beginning of a multi-polar era in AI hardware and the end of near-total reliance on a single vendor. For CTOs and infrastructure engineers, this means more options, potentially better pricing, and the ability to match hardware to specific workloads, such as inference versus training. For anyone trying to understand generative AI, it is a clear signal of the massive infrastructure investment the technology requires.

For financial analysts and investors, the key takeaway is that the market for AI GPUs is now a two-horse race. While Nvidia remains a formidable leader with its CUDA ecosystem, AMD is now a validated, well-funded challenger backed by the world’s most prominent AI company. The competitive dynamics have fundamentally changed. Anyone investing in the future of technology should monitor how this partnership is executed, particularly the development and adoption of the ROCm software stack and the real-world performance of the MI450 series.

Frequently Asked Questions (FAQ)

What is the $100 billion AMD OpenAI deal?

It’s a multi-year strategic partnership in which OpenAI commits to purchasing AMD Instinct GPUs (starting with the MI450 series in late 2026) for its AI supercomputing infrastructure, covering up to 6 gigawatts of compute capacity. The deal also includes an option for OpenAI to acquire up to a 10% equity stake in AMD.

How does the AMD OpenAI deal impact Nvidia’s market dominance?

The deal represents a significant strategic countermove, indicating OpenAI’s intent to diversify its hardware supply beyond Nvidia. While Nvidia remains a leader, this partnership introduces a strong competitor, potentially shifting market share and fostering greater competition in the AI chip landscape, especially for inference workloads.

What are the key technical advantages of AMD’s MI450 GPUs in this deal?

The MI450 GPUs are expected to be optimized for AI inference, pairing high performance with the large memory capacity crucial for large language models. The deal also validates AMD’s open-source ROCm software ecosystem and its chiplet technology, which offers cost-efficiency and scalability benefits over monolithic designs.

Why is OpenAI diversifying its AI chip suppliers?

OpenAI is diversifying its suppliers to ensure supply security, reduce reliance on a single vendor (like Nvidia), achieve better cost-efficiency for its massive AI infrastructure, and leverage specialized hardware optimized for specific AI workloads like inference. This strategy is vital for building its “AI factories.”

What does the 6 gigawatt (GW) deployment mean for AI infrastructure?

The commitment to deploy up to 6 gigawatts of AMD compute capacity signifies an enormous scale of AI infrastructure build-out. This level of power consumption highlights the immense demand for AI processing power and the strategic importance of energy-efficient chip designs and diversified hardware sources for future AI development.